Neuroscience/AI - Can rats ‘think’? A paper by Chongxi Lai and colleagues on cognitive teleportation in rats [8 min read]

Chongxi Lai’s thesis work, Jedi rats, and brain-machine interfaces.

I haven’t written anything publicly on neuroscience since before I entered government in early 2020. But a paper has just come out on bioRxiv that is the result of a project I’ve watched closely since its early days, and it is one of the most impressive pieces of work I have ever seen in neuroscience. So I thought I’d write a short blog post on the paper.

The paper is titled ‘Mental navigation and telekinesis with a hippocampal map-based brain-machine interface’ by Chongxi Lai, Shinsuke Tanaka, Timothy Harris, and Albert Lee.

I was at graduate school with Chongxi, the first author [twitter: @chongxi_lai]. I first remember discussing neuroscience with him by a lake on a student retreat, I think in 2015/6. I quickly thought he was probably the smartest and most creative neuroscientist that I’d met in my generation, along with someone I’d met in the UK.

Anyway, it was at that lakeside chat that I first heard some of his early thinking on the project, which he conceptualised and designed and, with his collaborators, brought to fruition in this new paper.

Chongxi’s thoughts, and the resulting paper, push the frontiers of what we think of as cognition in rodents, and provide access to brain signals that could allow new ways for humans to interact with machines. I’ll summarise the paper here, and leave these broader implications and possibilities for Chongxi to explain later. Suffice to say I think this technology has amazing potential to be the focus of a metascience institutional experiment.

Image generated by DALLE

Probing cognition in animals

At a high level, Chongxi wanted to probe whether rats could think.

In the last century many scientists still argued rats were little more than simple stimulus-response systems. When I was an undergraduate in 2010, primate researchers still claimed rats lack ‘cognition’, along similar lines (there is a species chauvinism in experimental neuroscience…).

It’s true rat brains are far smaller than ours, roughly the size of our thumb tips.

But, size aside, their brains are remarkably similar to ours. It takes a trained eye to tell rodent and primate brain tissue apart under the microscope. So perhaps in that tiny brain there lies something we might recognise as ‘cognition’ or ‘thinking’, even if, analogously, it is a GPT-2 in comparison to our GPT-6 brains.

Neuroscientists have previously decoded signals from rodent brains that are not purely responses to sensation or commands to move. One such example is the famous place cells in the hippocampus. These place cells are neurons that are active when a rodent is in a particular location. You can work out where an animal is by recording its place cell activity.

But knowing where you are doesn’t meet the intuitive bar of ‘thinking’ for most people, with its connotations of intention and imagination beyond the immediate situation. The same is true of the signals picked up by modern brain-computer interfaces - they access output-side systems, like a command to move an arm. It’s very impressive seeing someone move a robotic arm with their brain activity, but these systems don’t access the core of what we think of as thinking.

How to access ‘thinking’? A key difficulty with animals and experimental neuroscience is that you cannot tell them what you want them to think of or do. You have to give them nudges, like rewarding them for performing a particular action. This ‘behavioural training’ is as much an art as a science. Certainly, no one has found a way to make rats have reliable abstract thoughts. So it’s very difficult to assess what rodents are ‘thinking of’, if anything, and probe it experimentally.

Chongxi’s teleporting rat experiment, starting with rats in the metaverse

This is where Chongxi’s brilliant experimental design came in. When he first described it to me it was like something from far in the future, a giant conceptual leap that would require advances in technology alongside discoveries, combined with several conceptual insights, to achieve. I’d never heard a neuroscientist, of any age, speak with such combined ambition and ingenuity of approach.

Here is what the paper shows.

First, they focussed on studying the place cells in the brain that become active when a rat is in a particular location. The area containing them, the hippocampus, isn’t just a map of space. It has long been speculated to contain a ‘cognitive map’ (Tolman 1948), representing the abstract territory of thought. Others like Demis Hassabis had also shown the region is vital for imagining the future, with patients with major hippocampal damage unable to imagine new experiences [Hassabis et al 2007].

Chongxi speculated that if rats can indeed ‘think’ and imagine, you should be able to record place cells becoming active for future locations they want to go to, in a predictable way.

How to do this?

First, they put rats into virtual reality, translating the movements of the rat into movements in the virtual world. This lets them create arbitrary worlds for the rats to explore.

An example of a rodent (a mouse in this case) navigating in a virtual reality setup similar to the one used by Lai et al. Source Lukas Fischer, eLife

The rats quickly learn that reaching particular targets brings reward, and they move toward those targets when they appear. Let's say the rats view these targets as ‘goals’. These goals change trial by trial: The rats see a virtual reality goal like this:

The rats can do this very well and learn it quickly.

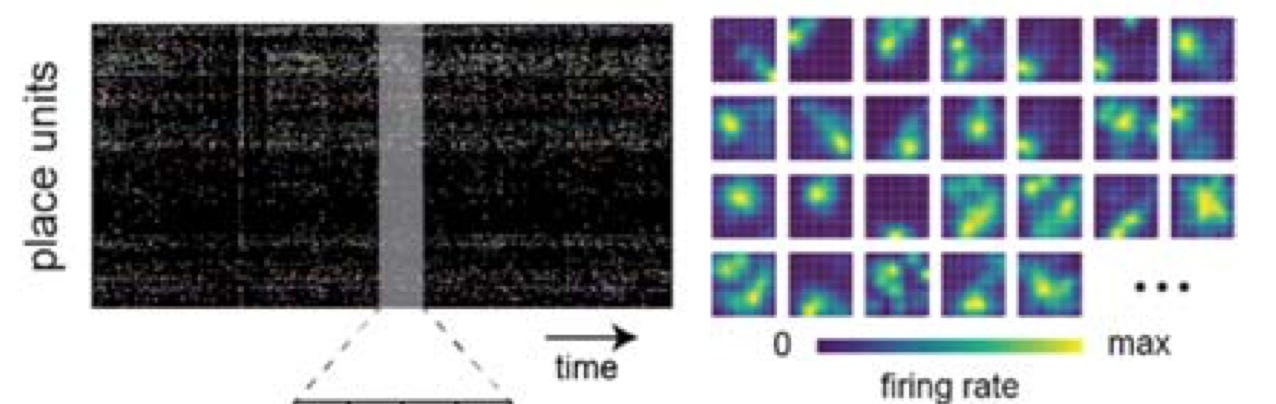

Whilst the rats do this task and collect rewards, the researchers are recording from brain cells in the hippocampus, where the rat’s place cells are. On the left are examples of neurons over time, with each white ‘dot’ being a neuron becoming active, and on the right are the same neurons’ spikes plotted against location - you can see they prefer particular locations. Figures from here on are all from Chongxi’s paper.

You can then use this activity to teach an artificial intelligence (neural network) to decode the location of the rat in virtual reality from the neural activity. So we have an artificial neural network decoding the activity of a biological neural network to work out where the rat believes they are in the virtual reality task.
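To make the decoding idea concrete, here is a minimal sketch on simulated data. It is not the paper's method: the paper trains a neural network on real recordings, whereas this toy swaps in a much simpler maximum-likelihood decoder on a one-dimensional track. The Gaussian place fields, the Poisson spiking model, and every name and parameter below (`tuning_rate`, `peak_hz`, `window_s` and so on) are assumptions chosen purely for illustration.

```python
import math
import random


def tuning_rate(pos, centre, peak_hz=20.0, width=0.1, baseline_hz=0.5):
    """Assumed Gaussian place field: expected firing rate (Hz) at `pos`."""
    return baseline_hz + peak_hz * math.exp(-0.5 * ((pos - centre) / width) ** 2)


def sample_poisson(lam, rng):
    """Draw a Poisson-distributed spike count (Knuth's method, fine for small lam)."""
    threshold = math.exp(-lam)
    count, product = 0, rng.random()
    while product > threshold:
        count += 1
        product *= rng.random()
    return count


def simulate_counts(pos, centres, window_s, rng):
    """Simulate one spike count per place cell for an animal sitting at `pos`."""
    return [sample_poisson(tuning_rate(pos, c) * window_s, rng) for c in centres]


def decode_position(counts, centres, candidates, window_s):
    """Return the candidate position with the highest Poisson log-likelihood."""
    def log_likelihood(pos):
        total = 0.0
        for n, c in zip(counts, centres):
            lam = tuning_rate(pos, c) * window_s
            # The log(n!) term is the same for every candidate, so it is dropped.
            total += n * math.log(lam) - lam
        return total

    return max(candidates, key=log_likelihood)


rng = random.Random(0)                        # fixed seed so the toy is repeatable
centres = [i / 29 for i in range(30)]         # 30 place fields tiling a unit track
candidates = [i / 100 for i in range(101)]    # positions the decoder may report
true_pos = 0.62

counts = simulate_counts(true_pos, centres, window_s=0.5, rng=rng)
decoded = decode_position(counts, centres, candidates, window_s=0.5)
print(f"true position {true_pos:.2f}, decoded position {decoded:.2f}")
```

The point of the toy is only that a population of cells, each preferring a different location, carries enough information to read out position from spike counts alone.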

So the researchers record brain activity and use it to work out where an animal is in virtual reality. This may sound futuristic, but so far we are in known territory for modern circuit neuroscience.

From navigating to thinking

How to go from this to ‘thinking’?

The rats are navigating in a virtual reality environment and, as said above, they are rewarded when they move to the goal area.

Chongxi realised that if he could record enough neurons at the same time, and develop an algorithm to decode the animal’s location from its brain cells in real time (rather than in later analysis), he could change the task in a subtle but brilliant way: he could reward the rat not for where it actually moved to in virtual reality by moving its legs, but for where its brain signals indicate it is. I.e., he could reward the rats for imagining they are in a particular location, without requiring them to actually move there.

This required a whole host of technical advances from when the project was conceived, and Chongxi and collaborators solved them all over a period of more than 8 years. It’s a great example of the benefits of combining engineering and discovery side by side for sustained time periods in a way that is very challenging to do in conventional research, creating ‘cycles of discovery and invention’. When he started the project, Chongxi bet on technology that would record an order of magnitude more neurons at once than was then possible, and on an ability to decode what that activity means in just 1 millisecond, rather than hours. The team achieved all of this, enabling Chongxi to create the new kind of brain-machine interface used here. Chongxi skated to where the puck was going, not where it was, readying other key technologies. I remember many evenings having dinner with Chongxi and Shinsuke as they described the latest challenges in their path to solving this…

So, how do they show rats thinking? The team did this in two different ways.

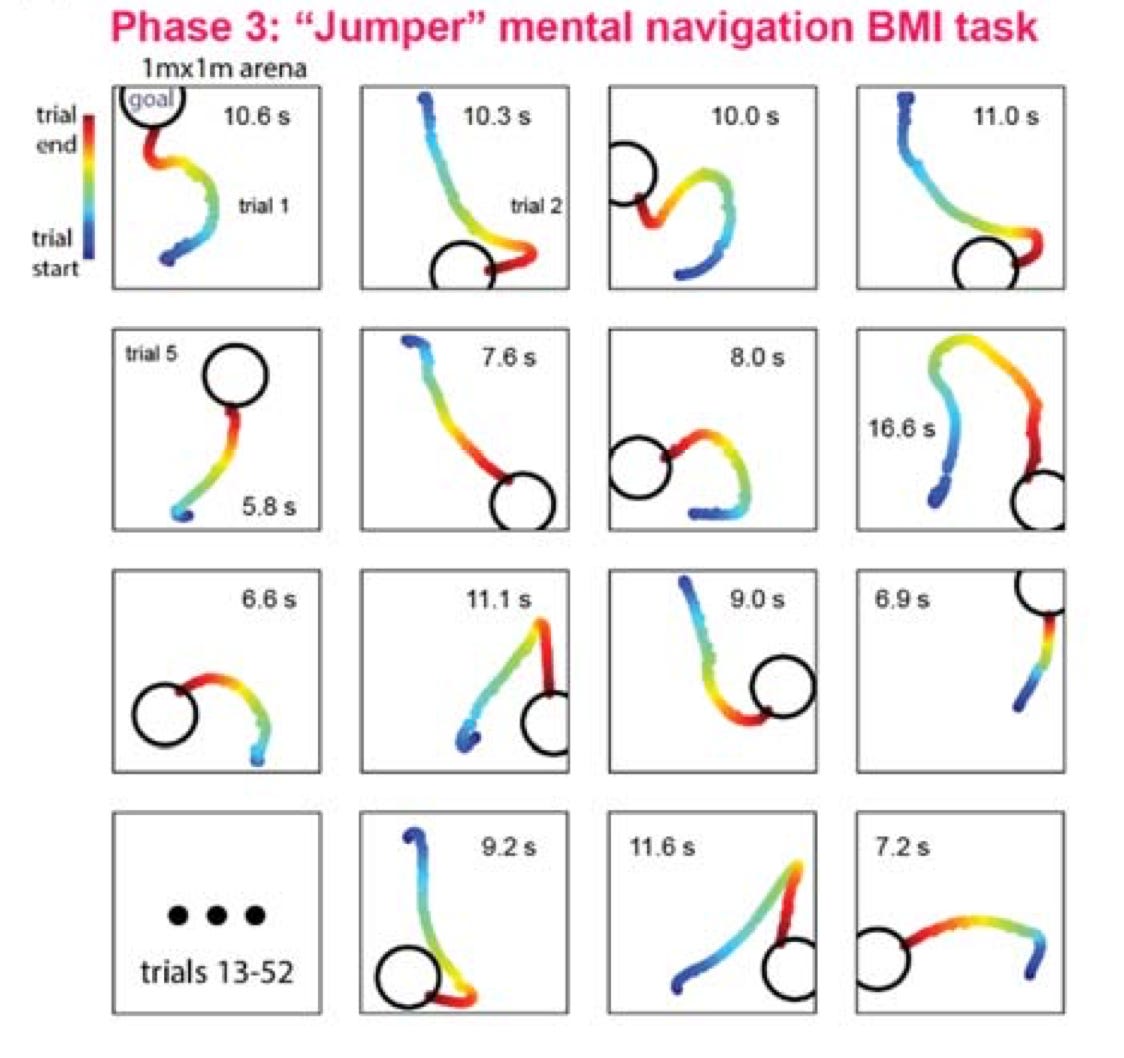

In the first task, known as the ‘jumper task’, the rat is rewarded when its brain activity is decoded as being at the goal area. The virtual reality is updated not based on any movement of the rat, but by the decoded brain location. It’s basically navigating the metaverse based only on your brain signals.
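The closed loop described here can be sketched in a few lines. This is a hedged illustration of the logic only, not the authors' software: `run_jumper_trial`, `TrialResult`, `toy_stream`, the goal radius and the step limit are all hypothetical names and values, and the stream of decoded locations is faked by a toy generator rather than coming from a real-time decoder.

```python
import itertools
import math
from dataclasses import dataclass, field


@dataclass
class TrialResult:
    rewarded: bool
    steps: int
    path: list = field(default_factory=list)


def run_jumper_trial(decoded_stream, goal, goal_radius=0.1, max_steps=200):
    """Drive the virtual position from decoded (x, y) locations only.

    Physical movement is never read: the only input is the decoder's output.
    """
    path = []
    limited = itertools.islice(decoded_stream, max_steps)
    for step, decoded_xy in enumerate(limited, start=1):
        path.append(decoded_xy)  # the VR view would be re-rendered from here
        if math.dist(decoded_xy, goal) <= goal_radius:
            return TrialResult(rewarded=True, steps=step, path=path)
    return TrialResult(rewarded=False, steps=len(path), path=path)


def toy_stream(start, goal, n_steps):
    """Stand-in for a real-time decoder: drifts linearly from start to goal."""
    for i in range(1, n_steps + 1):
        t = i / n_steps
        yield (start[0] + t * (goal[0] - start[0]),
               start[1] + t * (goal[1] - start[1]))


result = run_jumper_trial(toy_stream((0.0, 0.0), (1.0, 1.0), 50), goal=(1.0, 1.0))
print(result.rewarded, result.steps)
```

The design point is that nothing in the loop depends on the treadmill: if the decoded location never approaches the goal, no amount of running earns a reward.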

Amazingly, the rats can do this very well, moving their imagined brain activity toward the goal, with performance much higher than chance despite low statistical power (these are hard experiments to do), and not far from normal performance. Whilst the rats also walk to the goals in the early trials, they quickly seem to learn they don’t need to move, and on many trials where they successfully ‘teleport’, they do not move at all. Rats must be lazy and prefer not moving!

Here are plots of the rats’ ‘decoded location’, and you can see it moves steadily toward the goal:

Again, these movement trajectories are solely based on brain activity.

Here is an example of a comparison between a ‘running’ trial, where they move physically, and a trial where they do it mentally. The brain activity is below. Whilst there is less activity, it’s nonetheless enough for the decoder to work out where the animal imagines itself to be:

Now to the next task. In the task above, the virtual reality still updates - it just updates based on brain activity, not the rat’s movement.

In the next task, they do something different. They keep the virtual reality fixed, and instead an object moves toward the goal based on the brain activity. So the rat has the impression it is staying still. This they call the ‘Jedi’ task, as it’s like moving an object with the Force, except rather than the Star Wars Force, the force is the brain activity of the rat.
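One way to see the difference between the two tasks is to ask which part of the scene the decoded location is allowed to change. The sketch below is purely illustrative: the `Scene` type and the two update functions are hypothetical names, not anything from the paper's software.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

Point = Tuple[float, float]


@dataclass(frozen=True)
class Scene:
    viewpoint: Point             # where the rat's virtual 'self' is placed
    object_xy: Optional[Point]   # position of a controllable object, if any


def jumper_update(scene: Scene, decoded_xy: Point) -> Scene:
    """Jumper task: the decoded location moves the rat's own viewpoint."""
    return Scene(viewpoint=decoded_xy, object_xy=scene.object_xy)


def jedi_update(scene: Scene, decoded_xy: Point) -> Scene:
    """Jedi task: the viewpoint stays fixed and only the object moves."""
    return Scene(viewpoint=scene.viewpoint, object_xy=decoded_xy)


start = Scene(viewpoint=(0.5, 0.5), object_xy=(0.2, 0.2))
after_jedi = jedi_update(start, (0.8, 0.3))
after_jumper = jumper_update(start, (0.8, 0.3))
print(after_jedi)
print(after_jumper)
```

In both cases the input is the same decoded location; what differs is whether it is interpreted as ‘where I am’ or ‘where the object should be’.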

The rats can still do this, with their imagined brain activity moving to the goal location. The rats are fixed at the + in the middle of the plots below, and you can see that for the trials the decoded location usually, though not always, centers on the goal.

Like most great papers, it’s very simple - just two tasks, though that simplicity hides a remarkable range of advances needed to show this. This paper is to me the most convincing demonstration of cognition in rodents, and more importantly long term, it provides a way to access abstract computational signals in the brain. It's not difficult to imagine how useful this might be for someone who is paralysed.

In short, Chongxi successfully devised an experiment to probe the limits of cognition beyond that which had been pushed in any species, and he did it in rats, a species many considered not to have cognition at all.

I think there are incredible opportunities here…..