S&T - Is the UK a world leader in science?

With Professor Paul Nightingale - Looking at the top 100 papers in different priority tech fields to assess the UK position at the cutting edge

Update - This blog was the focus of an article in the Financial Times, 14th March 2023, ‘World-leading? Britain’s science sector has some way to go’ by Anjana Ahuja, and also in the Times Higher Education Supplement 30th March.

To view the pdf version of this doc, which includes the annex table, click below:

Is the UK a world leader in science?

Professor Paul Nightingale, Science Policy Research Unit, University of Sussex Business School, University of Sussex

Dr James W. Phillips, Honorary Senior Research Fellow at Department of Science, Technology, Engineering and Public Policy (STEaPP), UCL

TLDR:

Many UK policy debates assume the UK is a world leader in science, and that the major problem the UK faces is in translating its world leading research into products.

Commonly used metrics support this view of world leadership, with the UK accounting for 13% of the 1% most highly cited work across all research fields.

However, this measure is potentially misleading as focusing on the top 1% tells us little about UK performance at the absolute cutting edge of science, nor about the distribution across priority v non-priority areas.

When examining the most cutting edge work across the three priority technology areas from the UK Integrated Review, we find the UK is usually only present on around 3-7% of those advances, around a quarter to half the level you would expect from the commonly used measures supporting a Science Superpower narrative.

Further, in some cases this lead depends on a tiny number of atypical organisations like the Cambridge Laboratory of Molecular Biology (LMB) and DeepMind.

For example, without DeepMind the UK’s share of the citations amongst the top 100 recent AI papers drops from 7.84% to just 1.86%, whilst we are only present on one of the biggest 27 synthetic biology advances of the past decade, which itself came from the Cambridge LMB. In every case examined, a single institution such as MIT or DeepMind could be found that matched or exceeded the entire UK academic performance.

These early results indicate that the UK is good, but not outstanding, in priority areas of science and technology. It is clearly not as good as assumed by the most commonly used statistics.

These findings suggest a need for both increased investment and substantial reform in our approach to what we fund and how we fund and conduct cutting edge science. Excellent science is a critical anchor for the UK’s broader science and technology ecosystem, and our results question the widely held view that the UK is exceptional at invention but somehow weak at innovation. Rather, the UK is doing very well given low investment, but questions remain about whether we are doing well enough.

These results are preliminary, and are designed to start a debate about the levels, focus and nature of science funding in the UK. We strongly encourage constructive criticism and improvement of this argument.

Note - Zeta Alpha, an AI data analytics company, very kindly contributed AI analysis to this pro bono. This week they published a fuller version of the AI analysis that they did for us with a more international perspective. Read it here.

An erratum correction was made on the 16th May 2023, see endnotes for details. This does not alter any conclusions.

Image generated by DALLE, with a prompt of ‘scientists running a race to make new inventions’.

Introduction

Science, technology and economic policy discussions in the UK almost always assume the UK has a world-class science base and is, or is on the way to being, a Science Superpower. This implies it is punching substantially above its weight.

The Prime Minister gave a speech recently about the UK’s future with a focus on innovation and making the UK a “beacon of science, technology, and enterprise”. It reiterated a familiar position that the UK is excellent at research, but needs to be better at commercialisation and innovation. And the March 2023 government National Science and Technology framework says "The UK’s foundational science base is world-leading and broad, giving us the agility to rapidly advance discoveries and technologies as they emerge."

This usually sets up a puzzle: why is the UK struggling to commercialise its brilliant and world leading discovery science?

Here we consider an alternative interpretation: that whilst the UK is good at science, it is not reliably world leading. Moreover, it is quite far from the cutting edge in some of the most critical technology areas highlighted by the government itself. This is important, as there are broad and strong first mover advantages in technology, and the most cutting edge work is often particularly attractive to internationally mobile talent.

While there is a lot of talk in the UK about how world leading our science system is, in our experience that view is not always shared internationally, or by UK research policy experts and researchers who have worked in top US institutions and ecosystems such as those in Boston and California.

In private we pick up substantial concern that the UK is actually falling behind in early stage research, with some suggesting we are at least 5 years behind the cutting edge in some key fields such as synthetic biology. We believe this is reflected in the UK’s COVID R&D, where it excelled in large and high quality clinical trials as well as in exceptional deployment, but did not pioneer new technology1.

Whilst we certainly need to make improvements in areas around research spinouts, translation and higher technology readiness level research, there is also a need for substantial attention, increased investment, and reform at earlier stages in the research system.

This is not a formal academic paper, nor is it intended to be definitive. It is more of a provocation to promote debate based on initial and rough and ready analysis. We strongly welcome suggestions as to what we may have missed or how our analysis may be misleading.

If the arguments in part 1 are familiar to you, you can skip to part 2 without loss of understanding.

Part 1 - Analysing the existing basis of claims of world leadership in science and tech

We argue the UK narrative of world leadership in science and technology has arisen in part from a number of things:

Its past history of science and technology leadership, including its leadership in Nobel prizes. However, this is a very strongly lagging measure in multiple ways, usually reflecting research that is decades old.

A conflation of having highly ranked universities with having world leading research - University rankings do not directly translate into research rankings.

A focus on relatively bulk publication measures, rather than a focus on the rare ‘outlier’ results that are of world changing significance, such as the discovery of CRISPR, the double helix, or Transformer Nets.

This final point is the one we will cover in detail throughout this piece.

To begin we will scrutinise the data typically presented for showing that the UK is a world leader in Science and Technology, and highlight its limitations.

The first of these is the results of the 7 yearly Research Excellence Framework (REF) exercise. This is widely advertised to show the world class nature of UK Research, and is used to allocate resources. The Russell Group, for example, suggests that the REF results show 94% of their research is world leading or internationally excellent.

We think this is misleading, as only a subset of UK research is submitted to the REF, and it is selected to be the best. Claiming it is representative of the average output is a bit like extrapolating the fitness of the average person from the number of medals we win in the Olympics. Further, the REF exercise is primarily assessed by UK academics themselves, and while they are experts, it remains primarily a national self assessment exercise of the UK reviewing its own work, and there is an obvious incentive to boost their own field of research. The amount of ‘world leading research’ has also very substantially risen over time, suggesting grade inflation. Such a UK-only exercise may be useful in capturing the assessments of UK academics about the relative performance of UK institutions, but without explicitly comparing UK research against international research (ideally blinded and with international assessors), it is hard to see how it can say much about international performance.

Now let's turn to the claim that the UK performs extremely strongly in terms of its publication outputs relative to its international peers.

The plots in this section are all from 2022 UK government data which government uses to assess its international standing2.

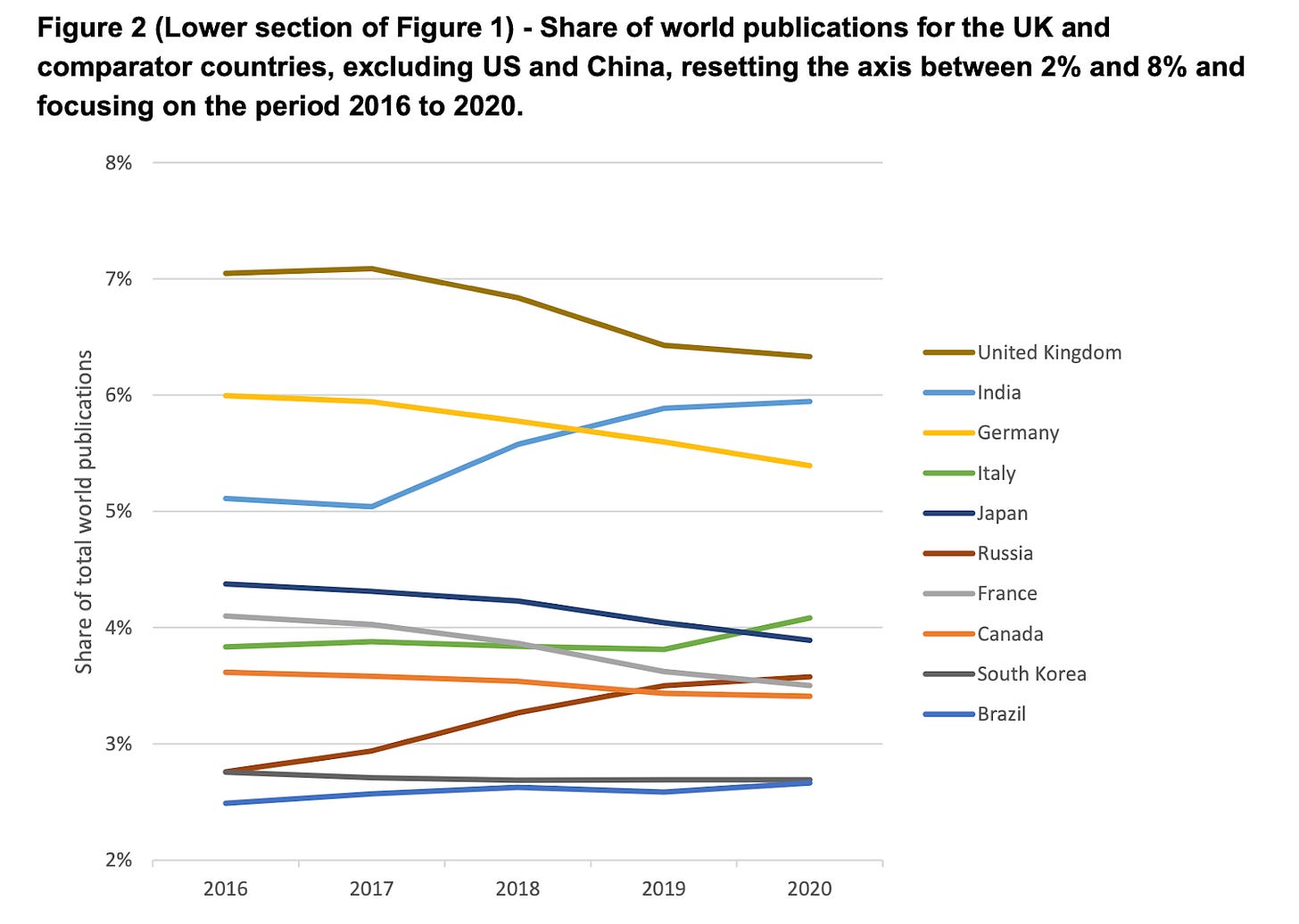

We can see, for example, that the UK is the third largest nation in the world by number of academic publications per year (the plot excludes US and China, and suggests we may soon be overtaken by India):

However, the number of papers published is not sufficient or even particularly useful to assess whether a nation’s science is world leading without looking at the quality of the outputs.

When we factor in quality the UK picture actually appears to further improve. We can do this by filtering the top 1% most cited papers across all fields. Citations measure the number of times other academics refer to the research papers, and can be taken as a proxy for academic impact, and in some instances quality. The UK is approximately 30% better than next in class Germany and Italy as of 2020 (this data again excludes the US and China).

This plot shows around a 13.5% share of the most highly cited publications for the UK, roughly 100% better than an average of similar competitors such as Germany, Canada, France, and Italy, and far higher than Japan and South Korea.

On the surface this would appear to strongly support the claim that the UK is a world leader in science and tech. However, while this ‘13% of top papers’ suggests we are very good at research overall, it is a potentially misleading basis for claiming the UK is at the cutting edge of science and technology.

It can partly be explained by the areas that the UK is good in. When we filter by Field Weighted Citation score, the UK’s leadership is much less clear cut, being roughly equal with Italy and Canada, with the US and Germany slightly behind. The Field Weighted Citation score takes into account that different areas have different citation patterns, and compares the number of citations a paper gets with the average number of citations you would expect for a paper in that field. On this measure, the UK is only marginally better, on average across all fields per published paper, than its main competitors, and neck and neck with Italy.
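To make the field-weighting idea concrete, here is a minimal sketch in Python. The records, country labels and function names are hypothetical and chosen purely for illustration; they are not the government data behind the plots. It also simplifies: published field-weighted indicators typically normalise by publication year and document type as well as field.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical toy records: (country, field, citations). Not real data.
PAPERS = [
    ("A", "biology", 40),
    ("A", "history", 4),
    ("B", "biology", 20),
    ("B", "history", 8),
]


def field_weighted_scores(papers):
    """Return (country, score) pairs, where score = citations / field mean."""
    by_field = defaultdict(list)
    for _, field, cites in papers:
        by_field[field].append(cites)
    field_mean = {field: mean(values) for field, values in by_field.items()}
    return [
        (country, cites / field_mean[field])
        for country, field, cites in papers
    ]


def country_average(papers):
    """Average the field-weighted score of each country's papers."""
    scores = defaultdict(list)
    for country, score in field_weighted_scores(papers):
        scores[country].append(score)
    return {country: mean(values) for country, values in scores.items()}


def raw_citations(papers):
    """Total unweighted citations per country, for comparison."""
    totals = defaultdict(int)
    for country, _, cites in papers:
        totals[country] += cites
    return dict(totals)


if __name__ == "__main__":
    print("raw citations:", raw_citations(PAPERS))
    print("field-weighted average:", country_average(PAPERS))
```

In this toy data, country A has many more raw citations than country B because its strength is in the heavily cited field, yet both countries average roughly 1.0 once each paper is compared with its own field's mean. That is the sense in which a raw citation lead can partly reflect which fields a country works in.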

Clearly, such a measure cannot be used to define a country as a science superpower unless Italy and Canada also are.

The above, so far as we can discern, is roughly the collected basis for claiming that the UK is a science superpower: its academic papers on average do better, but only moderately, than competitors. The numbers above are used a lot, we suspect in part because they are the analysis approach which gives the strongest impression of UK Research.

However, a lot of relevant detail is being missed here, and arguably the data is not assessing the most important factors. As we shall see below, this 13.5% of the top 1% papers figure does not accurately reflect performance at the edge of the art.

To understand this blog’s core point it is critical to understand that research is an immensely varied exercise, arguably more varied than any other field of policy. Inventing a new gene editing method, producing a new interpretation of a historical document, developing a new algorithm for a computer, improving a chemical dye, measuring an unusual bird population on a remote island, and finding a new lifestyle correlation for a type of cancer are all research, and would show up in these results. Yet such research varies enormously in the talent types, research resources, intellectual freedom, commercial connectivity, and timescale of support needed to conduct it. For example, a research system can be very good at some things, but a non player in expensive, highly risky technology development that could seed new tech companies and industries.

Based on this, there are a number of reasons to want to interrogate the claim from the data above much more deeply.

Firstly, the funding that follows evaluation exercises such as the Research Excellence Framework (REF) in the UK system strongly incentivises academics to publish papers that will be marked highly by the REF panels. The UK is therefore optimising particularly directly for the metrics it is assessing itself by. This is loosely analogous to ‘teaching to the test’. This has both positive and negative impacts. It encourages a very efficient system that generates more outputs of the kind that are rewarded by the exercise. However, it is biased against interdisciplinary research (see Rafols et al 2012) and while it encourages nationally and internationally excellent research, there are no extra benefits for doing the most demanding, genuinely disruptive, highly influential work that will have historical significance. It rewards research that is regarded as good now, not research that is regarded as truly exceptional today or that can be regarded as game changing in 100 years. See, for example, the points made recently in Nature on truly disruptive work3.

Secondly, and relatedly, the measures above will also not capture the most cutting-edge research, which has the biggest impact, such as the discovery of CRISPR. Given the size of the global science system, there is a lot of research in the top 1% most cited papers. By one 2015 estimate, there are around 1.8 million academic papers published every year in academic research. The top 1% of 1.8 million papers is still 18,000 papers, most of which are unlikely to have a transformative impact on the world. Those papers get the same reward and recognition as truly world-transforming research in the graph above.

We will now turn to look at the performance of the UK at the real cutting edge of science.

Part 2 - Outlier success analysis in priority strategic technology areas

We therefore need alternative ways to assess whether the UK is at the cutting edge.

It is often not possible to reliably pick out the most important work ‘at the time’, as its importance is sometimes only recognised much later. However, we can look at the extremely influential papers in key fields at a given time, and assume that is informative about future outcomes whilst keeping the above caveat in mind.

Simply assessing the top 1% of cited papers, as is done above, is a relatively unambitious and unselective measure. In a major field like Synthetic Biology, it may include thousands of papers. However, there may be only a dozen papers at most that really shift the dial each year.

Instead of the top 1% cited papers, we can look for the most highly cited papers in key areas, say the top 100 most highly cited, and assess whether the UK is present there.

In taking this approach we make a core assumption: that high-ranking results in science and technology have a disproportionate impact on the field. For example, 2 papers scoring 5/10 on a ranking are not equivalent to having one paper at 10/10. Put differently, having one paper solving the structure of the Double Helix, or inventing AlphaFold, is relatively more valuable than having 10 other papers with cumulatively similar citation impact. This assumption can be taken further, namely with the view that outlier results are the principal driver of progress in S&T, for example as covered by Michael Nielsen and Kanjun Qiu in their 2022 essay.

Whilst this assumption is not robustly proven, we think it is relatively uncontroversial that a science superpower nation should be expected to reliably produce such high impact results at a much higher rate than ‘non science superpower’ nations, especially when the metric used - academic papers - is the supposed greatest strength of the UK’s R&D system relative to other countries.

Given we are interested in the UK’s future economic performance and technological advantage, we will focus on critical priority technology families that have strong potential to lead to new products, firms, industries, and capabilities.

We have taken the three priority technologies from the 2021 UK National Integrated Review, a once-in-a-generation outlining of UK National Strategy across multiple domains. Pillar 1 of this strategy, beginning on page 35 and titled ‘Sustaining strategic advantage through science and technology’, committed the UK to achieving leadership in Artificial Intelligence, Quantum Technology, and Engineering Biology, as a keystone of economic and national security. They are predominantly, though not entirely, relatively resource-intensive areas of research, and areas of research in which there is fierce global competition for talent. We would argue that it is this kind of research that a ‘science superpower’ should be particularly, though far from exclusively, benchmarked against.

Our analysis is preliminary and necessarily messy - further methodology is in the footnotes4. For ease of reading, we have placed much of the data into footnotes - if you want to see raw counts, please look there.

All analysis is looking at the past 10 years (2013-2022), unless otherwise stated, using paper citation counts to rank papers.

Synthetic Biology

We use the search phrase ‘synthetic biology’ as it is the most broadly used term5, and look at data from the Web of Science over the last 10 years.

Amongst the 100 most cited, if we count a paper with even a single UK author on it, the results appear very respectable, with 22 in the top 100 papers6. To get a sense of US dominance, they have authors on 10 of the top 10 papers, 14 of the top 20, 39 of the top 50 and 74 of the top 100. Or put another way, on this crude data, the entire UK is about the same as a single US university - MIT - which has 8 in the top 50 and 20 in the top 100.

However, these are not necessarily primarily UK papers - they are papers with a co-author from the UK. For example, a paper might count as UK based if it has a single author out of 20 being in the UK, such as a visiting student or fellow. In examining the data we got a strong impression that this was driving the apparently very strong performance.

Therefore, to get a better sense of the UK contribution we added up the fractional contribution of UK authors.7 A paper with half its authors being UK affiliated would count 50%. Adding the contributions up, the UK contributes about a sixth of a paper to the top 10, two sixths to the top 20 papers, and 1 to the top 30 (3.33%). UK authors contribute just over 3.5 papers' worth of co-authors to the top 50 papers - about half what we might expect from the commonly used metric that we contribute 13% of the most important work.8
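The difference between counting any paper with a UK co-author and adding up fractional contributions can be sketched in a few lines of Python. The author lists below are hypothetical, and the function names are ours for illustration; the exact weighting rules used in the analysis are described in the footnotes and are not reproduced here. The sketch assumes each author carries a single country affiliation.

```python
# Hypothetical author-country lists for three papers. Not the data used above.
PAPERS = [
    ["US", "US", "US", "UK"],        # one UK author out of four
    ["UK", "UK"],                    # entirely UK authored
    ["US", "DE", "US", "US", "CN"],  # no UK authors
]


def whole_count(papers, country):
    """Count every paper that has at least one author from the country."""
    return sum(1 for authors in papers if country in authors)


def fractional_count(papers, country):
    """Sum, over papers, the fraction of authors affiliated with the country."""
    return sum(authors.count(country) / len(authors) for authors in papers)


if __name__ == "__main__":
    print("whole count:", whole_count(PAPERS, "UK"))            # 2
    print("fractional count:", fractional_count(PAPERS, "UK"))  # 1.25
```

Here whole counting credits the UK with two of three papers, while fractional counting credits it with 1.25 papers' worth of authorship. The gap widens as author lists get longer and UK authors become a smaller share of each list.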

Some of these papers are datasets, software packages and broad reviews of research. So they may be highly cited but may not be at the absolute cutting edge of discovery research. We can further filter our data to look at the most impactful papers, taking acceptance by Nature, Science, and Cell9 as a sign that a paper was judged cutting edge at the time of publication. This is an imperfect but nonetheless informative indicator. Of the top 100 papers by citation the US has 85 papers with US coauthors. The UK has 9 papers with UK coauthors.

Of these papers, the UK has no coauthors in the top 10, one in the top 20 (at position 20), and 7 in the top 50. Counting up the fractional contributions the UK contributes just under 6 ‘authors’ worth of papers to the top 100. Again this is about half what we might expect from the 13% metric. The overall picture is that the UK is not as good as Canada and a little bit better than Denmark.

This, however, is not a disgraceful showing at all - it rather shows - see the bar chart below - the near complete dominance of the USA in cutting edge synthetic biology. In this chart Scotland, Wales and Northern Ireland have no papers, so in this plot England is equivalent to the UK. To put the US dominance in perspective, three US institutions - Harvard, MIT and Howard Hughes - individually outperform the UK.

We can also get a more qualitative, ‘coal face’ impression from a comment paper published in Nature Communications in 2020, reviewing ‘the second decade of synthetic biology’. It focussed on highlighting the biggest advances of the prior decade. This was done by UK Imperial College Professor Tom Ellis and his student Fankang Meng, aided by a sourcing of ideas, suggestions, and debate from the twitter synthetic biology community [link].

Of 27 ‘major advances’ in synthetic biology of the last decade outlined in their main figure below, only a single one appears to have a strong UK link, from Jason Chin’s lab at the Cambridge LMB10, a rate of 3.7%. This is based on our examination of the discoveries in consultation with domain experts.

Advances highlighted by Meng and Ellis, 2020, Nature Communications. Only one has a clear UK link.

The Meng/Ellis paper's analysis was done by UK researchers, who may be being modest about the UK contribution.

Artificial Intelligence

Understanding UK performance in AI research is not easy. Research is much more influenced by industry, rapidly changing and often disseminated in conference papers rather than academic articles.

Given the difficulty of analysing the AI space, we collaborated with Zeta Alpha, a company that specialises in analysis of global AI trends. They conducted analysis of the years 2020-2022, where they have the cleanest data, and in which major changes in AI have occurred. The top 100 most cited papers for each year were counted, using the Zeta Alpha database of AI publications. If a paper has authors who belong to more than one organisation, it counts toward each organisation. To view their fuller analysis and methods, click here.

This analysis prima facie shows the UK coming in third for number of papers in the top 100 most cited, if Google owned and funded DeepMind is factored in. This is a strong performance, though a major gulf with the United States exists.

However, if DeepMind is removed, the UK is essentially neck and neck with Germany, Singapore, and Australia, with Canada and France close behind. None of their leading contributor organisations are tech giant owned labs.

I.e., the UK’s position at the forefront of AI appears to be largely driven by Google DeepMind.

The situation is particularly poor when we consider UK university research. Globally, 14 of the top 20 institutions by this ranking are Universities. However, none are from the UK. There are 4 UK Universities in the top 100 with a mean average position of 70, the highest ranked of which is Edinburgh at position 62. This is a poorer showing than Canada, which has 4 in the top 100 with mean average position of 53. Table 1 in Annex shows the data for this.

The data are based on paper counts. I.e., a paper ranked #100 and the top ranked paper would each count as one.

However, a different way of cutting the analysis worsens the situation further. If we look at the global % of citations amongst the top 100 papers, the UK position without Google/DeepMind collapses. It falls to 7th in the world, neck and neck with Hong Kong, and only marginally ahead of Switzerland, with just 1.86% of citations amongst the very most cited work published in the past 3 years.
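The two ways of cutting the data, counting papers versus counting citations, and the effect of leaving out one organisation, can be illustrated with a small Python sketch. Everything here is an assumption made for illustration: the papers, organisation names and citation numbers are invented, and the way a co-authored paper is treated when an organisation is excluded is our guess at a reasonable rule rather than a description of the Zeta Alpha method.

```python
# Hypothetical papers with (organisation, country) affiliations. Not real data.
PAPERS = [
    {"cites": 900, "orgs": [("LabX", "UK")]},
    {"cites": 60, "orgs": [("UniA", "UK"), ("UniB", "US")]},
    {"cites": 40, "orgs": [("UniB", "US")]},
]


def _counts_for(paper, country, exclude):
    """True if the paper has an affiliation in the country outside `exclude`."""
    return any(
        org_country == country and org not in exclude
        for org, org_country in paper["orgs"]
    )


def paper_share(papers, country, exclude=()):
    """Share of papers with at least one qualifying affiliation."""
    hits = sum(1 for p in papers if _counts_for(p, country, exclude))
    return hits / len(papers)


def citation_share(papers, country, exclude=()):
    """Share of all citations that fall on qualifying papers."""
    total = sum(p["cites"] for p in papers)
    hits = sum(p["cites"] for p in papers if _counts_for(p, country, exclude))
    return hits / total


if __name__ == "__main__":
    for label, skip in (("with LabX", ()), ("without LabX", ("LabX",))):
        print(
            label,
            round(paper_share(PAPERS, "UK", skip), 2),
            round(citation_share(PAPERS, "UK", skip), 2),
        )
```

In the toy data, dropping the single hypothetical lab halves the paper share but cuts the citation share far more sharply, because that lab wrote the most cited paper. Paper counts treat every top 100 paper equally, whereas citation share is dominated by the few most cited papers.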

We also consider publications in top conferences. We are grateful for access to some unpublished analysis by Juan Mateos-Garcia. Drawing on over 2 million AI publications between 2012 and 2021 from the OpenAlex website, which were filtered down to remove papers from education, linguistics, computer networking and neuroscience, we can get a picture of the national performance of the UK based on 1 million conference papers.

Conference acceptance is based on peer review, so is a useful measure of what peers think is leading, though not necessarily the most significant, work.

As the next figure shows, this suggests the UK comes third, behind the US, which dominates, and China which is growing strongly.

As the next figures show, over time the UK position has remained fairly constant as Chinese research has grown in first quantity and then quality. The figure below breaks papers down in terms of citations, with the top decile being the 10% most cited papers.

If we look at the impact of this growth on the relative quality of UK AI research we see a pattern where UK research achieves around 6-7% of the top 10% most highly cited papers (a far less selective measure than the top 100 papers used above). However, since 2017 there has been a decline in the relative quality of UK research, as measured by these imperfect citation metrics, which is probably partly a reflection of the growth of high quality Chinese AI research noted above.

Lastly, to double check this emerging picture, we analysed the performance of computer science departments in producing international conference papers, based on dblp.org data. We only find a single UK University department in the top 50 departments, which is especially concerning as top talent use this to help identify where to study. Details are in footnote 11.

Taken together, this data on the performance of UK AI research gives a high-level picture comparable to that from the Synthetic Biology data. The UK does well but not outstandingly, and it is particularly absent in the very most influential work over short-term time periods.

Quantum

Quantum is the third key technology in the UK Government’s Integrated Review. It is also difficult to assess, given the classified nature of military work, and given that backward-looking citations are biased against the explosion of recent research (where important work has come from the UK) and under-estimate the performance of China, where we see growing quality and quantity driven by huge recent investments, with over $15 billion of Chinese quantum investment, much larger than that of the EU and the US (though this likely excludes classified defence spend).12 Quantum is also a very broad research area, encompassing many quite different fields, which complicates analysis.

These caveats aside, when we look at national performance at producing highly cited papers the results are informative about current and future performance.

Looking at Web of Science ‘quantum’ non-review articles from 2013-2022, we find UK based coauthors on 20 of the top 100 papers, and 8 of the top 50, with 3 papers with UK coauthors in the top 10. This places the UK 3rd, behind the United States (47 papers), China (40 papers), and just ahead of Switzerland (17 papers) in its contribution of co-authors to the top 100 papers. The situation is similar with the top 50 and 20 papers.13 If we look at publications in the two leading journals - Nature and Science - in the last 10 years the UK has co-authors on 239 of 1567 articles.14 This is roughly a fifth of the performance of the US. However, this is only half the performance of Germany, and not very far in front of Switzerland. The Swiss performance is remarkable as it has a 10th of the UK population, but matches the UK performance in the top 10 and top 50 publications in Science and Nature. Concerningly the UK as a whole is outperformed on this last metric by two US institutions - MIT and Harvard.

If we look at the fractional contribution of authors with UK addresses to papers from the past decade - on a generous count that assumes papers with a majority of UK authors or papers led from the UK are pure UK papers - the UK produces about 1 and a third of the top 20 papers, just under 3 of the top 50, and about 9-10% of the top 100. However, the results fluctuated between different databases (which may reflect different definitions of review articles etc), so we have chosen the number which is the most generous reading of the data we could find with regards to UK performance. This approach gives a good performance, but is still less than we would expect from the ‘UK produces 13% of highly cited papers’ measure.

Given the major changes over time in this area, we analysed over a range of time scales. If we look at trends over time, then we can see periods of time when the UK was a quantum science superpower. Between 2003 and 2007 for example, the UK was second in terms of contributions to the top 100 most highly cited papers, with UK co-authors on 13 of the top 100, 9 of the top 50, and three of the top 10, including the 1st and 3rd most highly cited.

Looking forwards to subsequent 5 year timespans, the UK drops to number 4 (2008-12), then rises to number 3 (with three in the top 10, including the top cited paper) (2013-2017) and then drops to number 5 in the last five years (2018-2022), with no papers in the top 10 with UK coauthors. In terms of fractional contributions UK authors add just over 3 papers to the top 50, or 6%.

The overall picture is of good but not outstanding current UK performance. Based on our analysis, this looks like the area where the UK performs strongest. However, there are significant difficulties in interpreting actual capability in such defence-relevant work, and a science superpower the size of the UK would not be expected to perform at a level very close to Switzerland, with its much smaller population.

Making sense of these results

The data we have presented overall is not consistent with the UK being a science superpower, and the direction of travel suggests a declining relative performance as other nations improve. We have only looked at the three areas identified in the Integrated Review as areas of UK strength and future geopolitical importance. We find the UK performs well, but not outstandingly, in each area, with particular gaps in the most highly cited work. We are roughly comparable to Germany, whilst sometimes being outperformed by much smaller countries such as Singapore and Switzerland. In every research area looked at, single institutions such as MIT or DeepMind match or exceed the entire UK’s academic output. In terms of the highest performing science, the UK contribution rarely exceeds half of the ‘13% of the top cited papers’ statistic commonly used, and is less than a quarter of that by some measures.

We are not suggesting the UK performance is bad. In fact, we are impressed by how well the UK research system has performed given the rise of China, and the low levels of funding it receives by international standards. We outperform France which spends slightly more on HERD (Higher Education Expenditure on R&D), and in some cases Germany, which spends significantly more. This shows that research performance isn’t just a matter of inputs: there are major differences across nations in how efficiently their science systems generate outputs. Our worry is that we are in some cases being outperformed by smaller nations with much lower spending. They seem to be funding research and organising research more effectively and as a result generating higher quality outputs.

One clear conclusion we draw from this is that the UK needs to benchmark itself against the very best - which in these instances is typically the United States’ best ecosystems, and the high performing smaller nations such as Switzerland, Singapore and Denmark. Being near the front of the pack of large European nations can give a false sense of strong performance. We also note that many of the US's leading advances appear clustered in a relatively small number of locations. The UK also needs to focus beyond the ‘top 1% of papers’ metric, and look at fractional contributions. This will help focus on cutting edge research and avoid badging what are sometimes overwhelmingly overseas advances as UK papers due to having a small number of UK co-authors. We saw this enough to think it is artificially inflating UK performance substantially.

Our analysis has some significant limitations - the most impactful papers in the long-term are sometimes not immediately recognised when they are published. As Max Planck said, ‘Of course, it took a number of years until the physics community took notice of my theory. Because initially it was misunderstood by many people and was completely ignored, as it is often the case with such new things.’ [source]. Citation metrics have other well known limitations as measures of quality. For example, there is evidence that non-replicable papers are more highly cited than replicable ones15. The search criteria used to filter data here are also not clean and our data is somewhat messy and preliminary.

However, even with the strong caveat that highly cited papers are far from the full story, we think there are reasons for concern. We would expect a science superpower to be more reliably present in the very most highly cited work. Though we only closely analysed the three technology families the UK government has stated are of vital strategic importance, we have little reason to suspect the picture is notably different in other areas of technology-intensive research.

This is worrying because a lack of cutting edge research causes wider problems. For example, the UK might lack a pool of the very best researchers to peer review work. It means government advisory boards in Synthetic Biology and AI may lack people at the international cutting edge. It reduces the attractiveness of the UK to the top global talent who want to be trained in labs at the frontier of knowledge. These all create vicious cycles and, over time, the prestige of the UK as a place to do science is likely to decline. Whilst we cite our continued success in collecting Nobel prizes, these are typically given for work done many decades ago before the recent major changes in the global research landscape.

The UK research system is strong in many areas. It has been optimised for volume production, like a factory, with everyone having to publish papers at regular intervals. This drive to produce a high enough number of papers that can pass some quality threshold contrasts with the management of science in other nations, where there is more focus on long term support and the ability of researchers to explore the unknown. See for example this interesting interview with a top Swiss scientist. We suggest there is a need to reassess whether the UK has the right model. Further, for reasons we will explore in later posts, the UK system does not appear to prioritise the use of new technology and engineering in its basic research system.

Some key recommendations we think should be pursued:

The UK needs to rely on diverse and different approaches to assess its performance, including international esteem and peer opinion, and focus more on its ability to produce occasional very high impact work, rather than bulk measures of overall output.

The UK should consider long term ‘teams and people’ not short term projects funding, giving researchers greater freedom. This is explored by Peter Lawrence, who highlights in particular how bad the current system is for junior researchers [link to paper].

The UK should prioritise giving substantial research resources to top research teams with strong technical and engineering support, over prioritising volume of grant numbers, to ensure UK researchers can compete in technology intensive fields. Quality, not quantity, is key.

The UK should increase the ability of more junior researchers to create new paradigms, develop new ideas and conduct and lead their own research programs. The fields studied here have changed dramatically in the past 10 years, but sometimes the stock of Professors is relatively unrefreshed. Particularly in AI, an old generation of researchers from previous paradigms remains in control (DeepMind aside). For example, the University of Cambridge’s recent AI strategy makes no mention of the major advances in AI in recent years, of AGI, or even of Large Language Models.

We should look to learn from the institutions that are consistently at the cutting edge. Notably, both of the standout performers in the UK - the Cambridge LMB in SynBio and DeepMind in AI - have a very different model for organising, funding, and incentivising researchers from that of the large majority of public R&D efforts, as highlighted previously.

We also want to make clear we are not suggesting that we directly incentivise for very highly cited papers - this would be to repeat the managerialism and micromanagement mistake that we suspect has led to this situation. Rather, focus should be on the inputs to the R&D system, and placing greater trust in delivery organisations to produce unique and distinctive environments.

Our analysis is preliminary, and we strongly welcome critiques and challenges. Our intention is to start a debate, rather than provide the definitive final statement on the state of UK research.

As someone with long term policy expertise who reviewed this post said to us in their comments:

“The trouble with your paper is that it leads to a terrible hypothesis: the UK’s science and innovation funding system has dragged people towards a gravitational centre where people pursue things that are of little consequence to either academia or society. We have lost the ability to produce genuinely beautiful and creative breakthroughs which transform our understanding - and we have lost the ability to connect our knowledge and expertise up with the people and organisations that can most effectively exploit it for our collective benefit.”

In conclusion, our analysis suggests that we should start to question claims that the UK is a science and technology superpower, and not rely on what may be misleading and atypical metrics. Whilst we do well, we are not outstanding. We should think about how much we invest in the science system and, perhaps more importantly, how we fund science, and we should start to evaluate the relative performance of different institutions and approaches to funding. There are big differences across nations, areas, institutions and funding approaches, and we need to ensure we are using the best, not simply continuing with what we have because we have it.

Our aim here is to open up a debate about how the UK performs and what we should do.

Erratum correction 16th May: An earlier version said there was only 1 university in the top 100 in the table for AI. It is 4. This has been changed to ‘There are 4 UK Universities in the top 100 with a mean average position of 70, the highest ranked of which is Edinburgh at position 62. This is a poorer showing than Canada, which has 4 in the top 100 with mean average position of 53.’

Endnotes

1 It excelled in rapid and high quality clinical trials of known technology such as testing dexamethasone, but the cutting edge mRNA, antibody, and other treatments that were ultimately used were all developed abroad, with the UK’s success relying on relatively old adenoviral vector technology for the Oxford-AZ vaccine. This is not to dismiss the amazing work by academia, the UK biotech industry and AZ that went into developing, testing, scaling up and distributing the vaccine around the world - something that we all should be proud of. It is only to highlight that the most advanced work in vaccines was done and is being done outside the UK.

2 Gov UK - International comparison of the UK research base, 2022, Accompanying note

3 https://www.nature.com/articles/s41586-022-05543-x

4 Papers are not clearly categorised into topics, and key search terms such as quantum or synthetic biology can sometimes return papers which have no relevance to the topic, and miss papers that are relevant. However, looking at smaller numbers of papers makes ensuring the data is ‘clean’ easier. Altogether, looking across many cuts of the data, we think we show a relatively consistent picture.

All searches cover the last ten years to give a larger sample, unless stated otherwise, and we double check our analysis using both Web of Science and Scopus. Given the dramatic rise of China during the last ten years, and our method’s bias against newer papers that have had less time to pick up citations, our analysis may significantly overestimate current UK performance.

5 This subject goes via several names, from the international name ‘synthetic biology’ to the ‘engineering biology’ phrase used in some UK government documents, which is a somewhat broader concept but is not a term used as much internationally.

6 We have 1 paper in the top 10 with at least one UK based coauthor amongst all the authors in a paper, 2 in the top 20, 9 in the top 50 and 22 in the top 100.

7 The top UK paper has 3 of 18 authors as UK based, the second 4 of 22, the third 3 of 20, the fourth 2 of 25, the fifth is 2 of 5 (on a survey), etc.
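
To make the difference between whole counting and fractional counting concrete, here is a minimal Python sketch using only the five author splits quoted in this endnote. The function names are ours, and it assumes the simplest form of fractional counting, in which each paper is credited to the UK in proportion to its share of UK-based authors. It is an illustration, not the method behind any of the figures in this post.

```python
from fractions import Fraction

# (UK-based authors, total authors) for the five papers listed in endnote 7.
uk_papers = [(3, 18), (4, 22), (3, 20), (2, 25), (2, 5)]

def whole_count(papers):
    """Count every paper with at least one UK-based author as one UK paper."""
    return sum(1 for uk, total in papers if uk > 0)

def fractional_count(papers):
    """Credit each paper to the UK in proportion to its share of UK-based authors."""
    return sum(Fraction(uk, total) for uk, total in papers)

whole = whole_count(uk_papers)
fractional = fractional_count(uk_papers)

print(f"Whole count: {whole} papers")
print(f"Fractional count: {float(fractional):.2f} paper-equivalents")
```

Under these assumptions the five papers that whole counting badges as UK papers amount to just under one paper-equivalent (about 0.98), which is the kind of gap the fractional approach is meant to expose.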

8 To double check this result, and address a concern that the search term ‘synthetic biology’ may be too crude, we used another broader search term developed by Raimbault (2016), Mapping the Emergence of Synthetic Biology. PLoS ONE 11(9): e0161522. doi:10.1371/journal.pone. This gives us the same results - 1 paper in the top 10 (at number 10), 2 in the top 20, etc.

9 39 of these highly cited papers are in Science, Nature or Cell. These are the top three journals for publishing cutting edge research. (Note that because this is a broad category, a lot of highly cited papers are review papers.) So it’s important to look at cutting edge research.

10 The main figure does not individually cite papers underlying the 27 advances, but three synthetic biologists we asked confirmed that the only one they thought had a major UK link was the E. coli genome synthesis paper from Jason Chin’s lab, which matches our assessment. Looking at the citations the paper does make, it shows only a single UK paper listed as a major advance, the paper from Chin’s laboratory at the Cambridge LMB.

Gibson, D. G. et al. Creation of a bacterial cell controlled by a chemically synthesized genome. Science 329, 52–56 (2010). [all at US Venter institute]

Kwok, R. Five hard truths for synthetic biology. Nature 463, 288–290 (2010). [Freelance writer, San Francisco area]

Wang, B., Kitney, R. I., Joly, N. & Buck, M. Engineering modular and orthogonal genetic logic gates for robust digital-like synthetic biology. Nat. Commun. 2, 508 (2011). [all UK authors, though it’s cited as ‘one of the first synthetic biology papers in Nature Communications’, the journal the Ellis review is published in, which was new in 2011, and is a reference to teething problems in the field rather than a major new invention, so this isn’t really a fair reference to count toward the total]

Nielsen, A. A. K. et al. Genetic circuit design automation. Science 352, aac7341 (2016). [all authors US based, most in Boston]

Lajoie, M. J. et al. Genomically recoded organisms expand biological functions. Science 342, 357–360 (2013). [all US, almost all Boston/Yale]

Qi, L. S. et al. Repurposing CRISPR as an RNA-guided platform for sequence-specific control of gene expression. Cell 152, 1173–1183 (2013). [all US authors]

Cambray, G., Guimaraes, J. C. & Arkin, A. P. Evaluation of 244,000 synthetic sequences reveals design principles to optimize translation in Escherichia coli. Nat. Biotechnol. 36, 1005–1015 (2018). [all US based, one has a joint position in France]

Ng, A. H. et al. Modular and tunable biological feedback control using a de novo protein switch. Nature 572, 265–269 (2019). [all US based]

Karr, J. R. et al. A whole-cell computational model predicts phenotype from genotype. Cell 150, 389–401 (2012). [all US based]

Hutchison, C. A. 3rd et al. Design and synthesis of a minimal bacterial genome. Science 351, aad6253 (2016). [all US based]

Richardson, S. M. et al. Design of a synthetic yeast genome. Science 355, 1040–1044 (2017). [all US based]

Fredens, J. et al. Total synthesis of Escherichia coli with a recoded genome. Nature 569, 514–518 (2019). [Largely a UK based paper - Chin lab]

Pardee, K. et al. Paper-based synthetic gene networks. Cell 159, 940–954 (2014). [all US based]

Tinafar, A., Jaenes, K. & Pardee, K. Synthetic biology goes cell-free. BMC Biol 17, 64 (2019). [Canada, this is a review not research]

Casini, A. et al. A pressure test to make 10 molecules in 90 days: external evaluation of methods to engineer biology. J. Am. Chem. Soc. 140, 4302–4316 (2018). [Boston, a piece on a test of their biofoundry]

11 This information has been gathered at csrankings.org based on the 6,475,000 computer science outputs captured at https://dblp.org/, and covers University Departments. This is an important source of information for prospective PhD students and is used by international students to decide which departments they will apply to. When we look at the performance of international departments in computer science, focusing on AI, we find the UK has no departments in the top 30, only 1 (Edinburgh, at number 36) in the top 50, and 5 in the top 100 (Edinburgh 36, Oxford 53, Imperial 65, UCL 78 and Surrey 92). If we look at the narrower category of AI within AI the UK performance improves and we have 7 in the top 100 (Oxford 13, Imperial 45, Liverpool 46, Southampton 61, Edinburgh 91 and Kings 93). The rankings are now dominated by China, which has 8 of the top 10 departments (Singapore has the other two). Given the concern about the relative decline in the performance of AI research from the UK in recent years, highlighted above, we can also look at more recent performance from 2019 to 2022, which gives a noisier but still informative and comparable picture. The top UK department is Edinburgh at 34, and it is the only UK department in the top 50. In total we have 5 departments in the top 100 (the others being Oxford 54, Imperial 63, UCL 77 and Surrey 92).

13 If we look at the most highly cited 50 papers the UK is joined by Germany and Switzerland with 10 papers each.

14 If we focus on publications in the very top 4 journals - Nature, Physical Review Letters, Science and Nature Communications (547 papers in total on Quantum in the previous decade), the data shows a reasonable UK performance. The USA dominates and has authors on 301 papers, compared to 116 for China, 115 for Germany and 93 for the UK. These are followed by Japan (61), France (53), Switzerland (42), Canada (41), Spain (37) and Australia (31).
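
As a rough check on what these counts mean as shares, the short Python sketch below converts the figures quoted in this endnote into percentages of the 547 papers. The variable names are ours. Note that these are whole counts: a paper with authors in several countries is counted once for each, so the shares add up to well over 100%.

```python
# Papers (out of 547) with at least one author from each country, as quoted in endnote 14.
TOTAL_PAPERS = 547
papers_with_authors = {
    "USA": 301, "China": 116, "Germany": 115, "UK": 93, "Japan": 61,
    "France": 53, "Switzerland": 42, "Canada": 41, "Spain": 37, "Australia": 31,
}

# Whole-count share: percentage of the 547 papers with an author from that country.
shares = {
    country: 100 * count / TOTAL_PAPERS
    for country, count in papers_with_authors.items()
}

for country, share in shares.items():
    print(f"{country:<12} {share:5.1f}%")

# Internationally co-authored papers are counted once per country,
# so the country counts sum to more than the number of papers.
total_attributions = sum(papers_with_authors.values())
print(f"Country attributions: {total_attributions} across {TOTAL_PAPERS} papers")
```

On these figures the UK appears on about 17% of the papers and the USA on about 55%, with the ten listed countries together accounting for 890 attributions across 547 papers.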

15 “Nonreplicable publications are cited more than replicable ones” Science Advances, Marta Serra-Garcia and Uri Gneezy 2021